Outside of that (and largely useless activities such as online gaming), I have tried to find the time and motivation to work on some kind of creative activity. I'm trying to avoid diving into my large projects (such as Synergy) for a few reasons:

- Working on them is mentally draining and involving, and I need to maintain my mental stamina for academic pursuits.

- I'm learning a lot of very pertinent programming methods in my study this semester, methods which apply directly to improving the operation of Synergy. As such, I want to wait until I have grasped this knowledge more fully before I apply it to my personal projects.

So my focus has been on finding creative activities that still contribute to the development of my overall skill set, and ultimately my portfolio of work, but are lightweight enough that I can pick them up, work on them for a set amount of time, and then put them down and return to my study.

Ultimately, this has led me to do a lot more artistic activities, such as drawing. Nick introduced me to a very useful site called 'Posemaniacs'. Head over there if you want to see what it's about. You don't want to? Fine, I'll explain: it is a collection of browser-embedded tools for human figure sketching and study. My link leads to the 30-second drawing section, which, as you might suspect, presents figures in poses for only 30 seconds at a time before changing, promoting rapid sketching and utmost line economy.

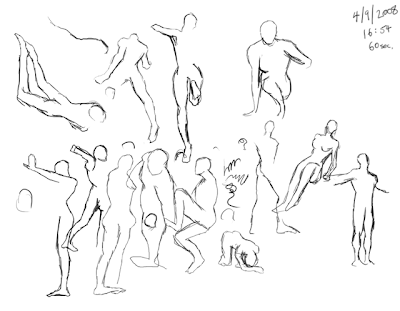

I've only attempted a couple of sessions, undertaking a more reasonable [for me] configuration of 60 seconds. Here's how they've turned out:

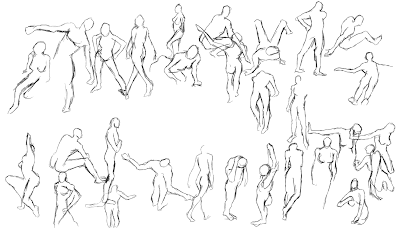

And a slightly less deplorable collection:

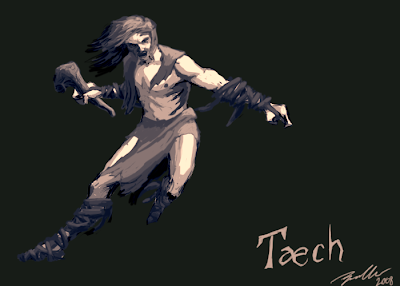

I also partook in a competition over at GeekFanGirl, where entrants had to either draw a scene or character from an active role play, or write a short story about it/them. Turns out, I was the only one to do an art entry. I drew one of the main NPCs from the RP I GM, the wild druid, Taech:

There was a deadline for entries, and mine made it with 40 seconds to spare. To put it another way, this entry was largely rushed. This was a positive thing, in my mind, because I learned a lot about being economical with my workflow. I'm irritated with a lot of things in the piece though, such as the messed-up lines and artifacts around the silhouette, the skull structure being a complete deviation from what I wanted, and the completely schizophrenic rendering styles across the image.

Still, I'm pretty proud of the results. I refuse to alter it, leaving it as a record of what I could accomplish in the time I had to work on it.

So that's about it. I'm hoping to make the 60-second sketches a regular, if not daily, activity. If I can manage that, then I believe I am well on my way to becoming a competent artist.